Counting Vulnerabilities

When talking with infosec professionals about how vulnerable their organisations are, I’ve noticed that many reply with one simple statement: “We have N vulnerabilities.” Whilst it’s trivial to count vulnerabilities with a vulnerability scanner, that number by itself gives little insight into how effective the assessment really is. Telling an organisation that it has 10,324 vulnerabilities, whilst shocking, doesn’t convey the actual risks it faces. Nor does it give a clear view of how well a foundational control like vulnerability management has been operationalised across the infrastructure.

Counting is not enough

Consider for a moment why the total vulnerability count is a poor metric:

- A total count without any context doesn’t convey how many devices were scanned. A figure like 10,324 could mean that you have scanned only 1% of the systems within your environment (which are horrendously vulnerable) and still have no idea about the other 99% of assets communicating on your network.

- A total count doesn’t take the severity of the vulnerabilities into consideration. Not all vulnerabilities are equal; some are low risk and can be deprioritised, whereas others are hugely important and could easily lead to data loss.

- A total count doesn’t take into consideration how critical the vulnerable assets are to the business. Faced with patching either a non-critical system with 10,324 vulnerabilities or a business-critical system with only one, many would choose to remediate the non-critical asset, since that would have the biggest impact on drawing down the total count.

- The total count will inevitably rise as you scan more devices, and scan them in more depth, which can drive the wrong behaviour: teams may avoid credentialed scans simply to keep the number down. Credentialed scanning is critical to understanding the true vulnerable state of a device, so its use should be encouraged, not deterred by a flawed metric.

- IT operations and IT security teams will quickly begin to feel that they are climbing an insurmountable mountain. No matter how quickly they push out patches, the number only appears to get worse as more scan results are piled on top. This can lead to apathy or a lack of buy-in from the operations and patching teams.
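To make the third point concrete, here is a minimal Python sketch using made-up assets. Ranking by raw count puts the non-critical system first; ranking by a hypothetical priority key (asset criticality first, then the worst CVSS score on the asset) puts the business-critical system first. The asset names, the `critical` flag, the scores and the sort key are all illustrative assumptions, not a prescribed scoring model.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    critical: bool       # hypothetical flag: is the asset business critical?
    cvss_scores: list    # made-up CVSS base scores of the open findings


def by_count(assets):
    """Order assets by raw vulnerability count, highest first."""
    return sorted(assets, key=lambda a: len(a.cvss_scores), reverse=True)


def by_priority(assets):
    """Order assets by criticality first, then by their worst finding."""
    return sorted(
        assets,
        key=lambda a: (a.critical, max(a.cvss_scores, default=0.0)),
        reverse=True,
    )


assets = [
    Asset("test-box", critical=False, cvss_scores=[2.6] * 10_324),
    Asset("payments-db", critical=True, cvss_scores=[9.3]),
]

print([a.name for a in by_count(assets)])     # ['test-box', 'payments-db']
print([a.name for a in by_priority(assets)])  # ['payments-db', 'test-box']
```

Chasing the count would spend the patching effort on `test-box` first, even though the single finding on `payments-db` is the one that matters to the business.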


I could go on, but I hope you’re getting the picture. So what should be measured instead?

Consult the 20 Critical Security Controls

My preference is always to turn to best practice rather than reinvent the wheel. Critical Security Control 4, Continuous Vulnerability Assessment and Remediation, from the Council on CyberSecurity’s Critical Security Controls (formerly the SANS Critical Security Controls), has some excellent metrics to adapt. Of its effectiveness and automation metrics, the two that for me give the best view of how well vulnerability management has been operationalised within the environment are:

How long does it take, on average, to completely deploy application or operating system software updates to a business system (by business unit)?

Or in simpler terms: “What’s our average patch rate?”

What is the percentage of the organization's business systems that have not recently been scanned by the organization's approved, SCAP compliant, vulnerability management system (by business unit)?

Or in simpler terms: “What’s our scan coverage?”
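As a rough sketch of how both metrics could be calculated per business unit, the Python below assumes two hypothetical data sources: remediation records (business unit, date a finding was detected, date its fix was confirmed by a rescan) and an asset inventory (business unit, date last scanned). The record layouts and the 30-day "recently scanned" window are assumptions for illustration; the Critical Security Controls do not define them.

```python
from collections import defaultdict
from datetime import date, timedelta


def average_days_to_patch(fixes):
    """Metric 1: {business_unit: mean days from detection to confirmed fix}.

    fixes: iterable of (business_unit, detected, remediated) tuples for
    findings whose fix has been confirmed by a rescan (hypothetical layout).
    """
    elapsed = defaultdict(list)
    for unit, detected, remediated in fixes:
        elapsed[unit].append((remediated - detected).days)
    return {unit: sum(days) / len(days) for unit, days in elapsed.items()}


def percent_not_recently_scanned(inventory, today, window_days=30):
    """Metric 2: {business_unit: % of systems not scanned within window_days}.

    inventory: iterable of (business_unit, last_scanned) pairs, where
    last_scanned is None for systems that have never been scanned.
    window_days is a hypothetical policy value.
    """
    cutoff = today - timedelta(days=window_days)
    totals, stale = defaultdict(int), defaultdict(int)
    for unit, last_scanned in inventory:
        totals[unit] += 1
        if last_scanned is None or last_scanned < cutoff:
            stale[unit] += 1
    return {unit: 100.0 * stale[unit] / totals[unit] for unit in totals}


fixes = [
    ("Finance", date(2014, 3, 1), date(2014, 3, 11)),
    ("Finance", date(2014, 3, 1), date(2014, 3, 21)),
    ("Retail", date(2014, 3, 5), date(2014, 4, 4)),
]
inventory = [
    ("Finance", date(2014, 4, 20)),
    ("Finance", date(2014, 1, 2)),
    ("Finance", None),
    ("Finance", date(2014, 4, 28)),
    ("Retail", date(2014, 4, 25)),
]

print(average_days_to_patch(fixes))  # {'Finance': 15.0, 'Retail': 30.0}
print(percent_not_recently_scanned(inventory, today=date(2014, 5, 1)))
# {'Finance': 50.0, 'Retail': 0.0}
```

Note that the coverage figure is only as good as the inventory behind it: a system missing from the inventory can never show up as unscanned.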

Understanding how quickly vulnerabilities are patched gives visibility into how efficient the patch process is: once vulnerabilities have been identified, how quickly will the operations team respond and patch them? To break this down further, I always suggest that organisations prioritise vulnerabilities by the criticality of the affected asset and by the impact of the bug as rated by CVSS (Common Vulnerability Scoring System), using both the base and temporal metrics built into it. Knowing that an easily exploitable vulnerability on an Internet-facing or business-critical system will, by default, be patched within a set number of days builds confidence in the process, and it shows operational improvement against a target that is both achievable and demonstrable.
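One way to express this prioritisation is sketched below in Python, assuming CVSS v2 scoring. It computes the v2 temporal score (base score multiplied by the Exploitability, Remediation Level and Report Confidence factors, rounded to one decimal place) and combines it with whether the asset is Internet facing or business critical to choose a target patch window. The three-tier scheme, the 7.0 threshold and the 7/30/90-day targets are hypothetical examples of what an organisation might agree on, not values taken from the Critical Security Controls.

```python
# CVSS v2 temporal metric multipliers ("ND" = Not Defined).
EXPLOITABILITY = {"U": 0.85, "POC": 0.90, "F": 0.95, "H": 1.00, "ND": 1.00}
REMEDIATION_LEVEL = {"OF": 0.87, "TF": 0.90, "W": 0.95, "U": 1.00, "ND": 1.00}
REPORT_CONFIDENCE = {"UC": 0.90, "UR": 0.95, "C": 1.00, "ND": 1.00}


def temporal_score(base, e="ND", rl="ND", rc="ND"):
    """CVSS v2 temporal score: base x E x RL x RC, rounded to one decimal.

    Uses Python's round(), which is adequate for a sketch but may differ
    from strict half-up rounding on exact .x5 boundaries.
    """
    product = base * EXPLOITABILITY[e] * REMEDIATION_LEVEL[rl] * REPORT_CONFIDENCE[rc]
    return round(product, 1)


def target_days(temporal, exposed):
    """Hypothetical default patch window in days.

    exposed: True if the asset is Internet facing or business critical.
    The 7.0 threshold and 7/30/90-day targets are illustrative only.
    """
    if exposed and temporal >= 7.0:
        return 7
    if exposed or temporal >= 7.0:
        return 30
    return 90


score = temporal_score(9.3, e="F", rl="OF", rc="C")
print(score)                              # 7.7
print(target_days(score, exposed=True))   # 7
print(target_days(score, exposed=False))  # 30
print(target_days(temporal_score(5.0, e="U", rl="OF", rc="C"), exposed=False))  # 90
```

Measuring the actual time to patch against these per-tier targets, rather than against the total count, gives the operations team a goal it can realistically meet and improve on.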


The second metric, scan coverage, is of huge importance for understanding how well a foundational control like vulnerability management has been deployed. Highlighting that only 5 IPs are being scanned, even though a 10,000-IP licence was purchased, exposes the gap and encourages the right behaviour. Breaking this number down further can be more effective still. For example, 100% of business-critical and Internet-facing systems should be scanned with credentials within X timeframe, whereas non-critical systems should be scanned without credentials within Y timeframe.
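A tiered target like this could be checked with something like the following Python sketch. Each host carries a tier, the date of its last scan and whether that scan was credentialed; a policy table supplies the maximum scan age and whether credentials are required for each tier. The tier names and the 7- and 30-day values stand in for X and Y and are placeholders, not recommendations.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical policy: tier -> (maximum days since last scan, credentials required?)
POLICY = {
    "critical": (7, True),        # business-critical and Internet-facing systems
    "non-critical": (30, False),
}


@dataclass
class Host:
    ip: str
    tier: str
    last_scanned: Optional[date]  # None if the host has never been scanned
    credentialed: bool            # was the most recent scan credentialed?


def compliant(host, today):
    """True if the host's latest scan meets the policy for its tier."""
    max_age, needs_creds = POLICY[host.tier]
    if host.last_scanned is None:
        return False
    if today - host.last_scanned > timedelta(days=max_age):
        return False
    return host.credentialed or not needs_creds


def coverage_by_tier(hosts, today):
    """Return {tier: percentage of hosts in that tier meeting the policy}."""
    result = {}
    for tier in POLICY:
        in_tier = [h for h in hosts if h.tier == tier]
        if in_tier:
            ok = sum(compliant(h, today) for h in in_tier)
            result[tier] = 100.0 * ok / len(in_tier)
    return result


today = date(2014, 5, 1)
hosts = [
    Host("10.0.0.1", "critical", date(2014, 4, 29), credentialed=True),
    Host("10.0.0.2", "critical", date(2014, 4, 29), credentialed=False),
    Host("10.0.1.1", "non-critical", date(2014, 4, 10), credentialed=False),
    Host("10.0.1.2", "non-critical", None, credentialed=False),
]

print(coverage_by_tier(hosts, today))  # {'critical': 50.0, 'non-critical': 50.0}
```

Here the second critical host counts against coverage even though it was scanned recently, because the scan ran without credentials.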

SecurityCenter tools

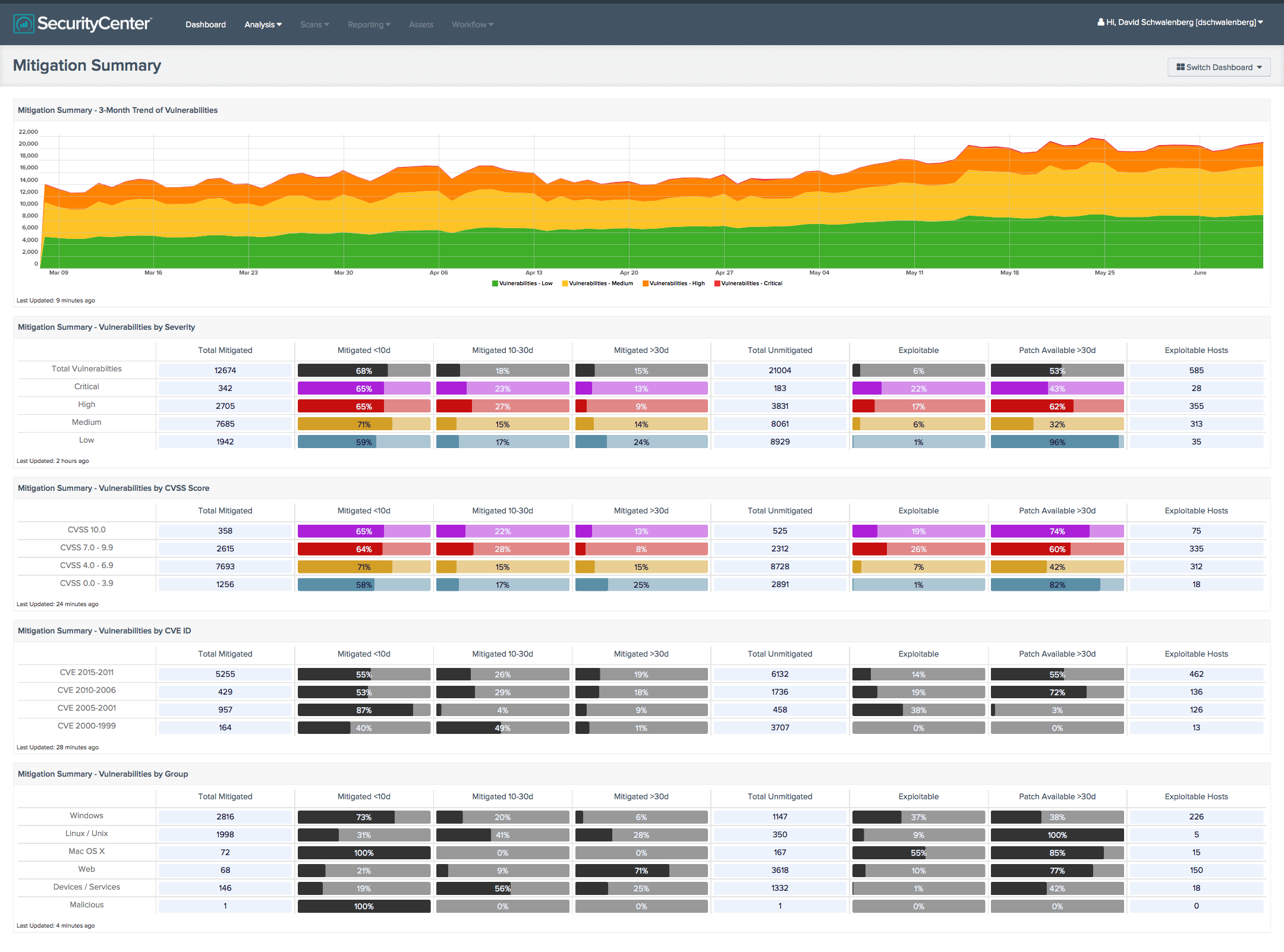

For SecurityCenter™ customers, bubbling up this information is easy, and examples are included in the Dashboard feed. For example, the Mitigation Summary Dashboard gives useful visibility into your patch rate, and the Council on CyberSecurity 20 Critical Security Controls Dashboard gives visibility into scan coverage.

Our research team is always adding new dashboards to SecurityCenter; check the feed regularly for new metrics that provide insight into the effectiveness of your vulnerability assessment program.
